RAG Evaluation with RAGAS and MLflow - A Practical Guide

A comprehensive tutorial demonstrating RAG evaluation using RAGAS metrics through MLflow integration. Learn to build a minimal RAG pipeline with LangChain,...

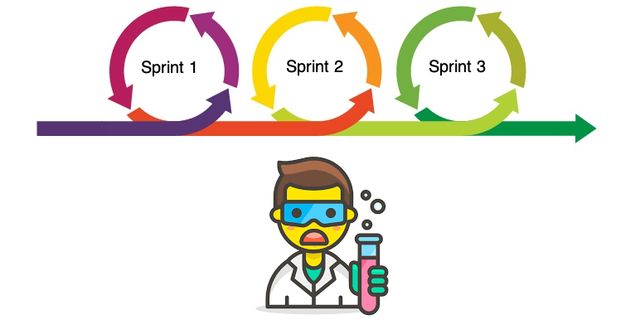

Shape Up offers a focused alternative to sprint-driven development, trading constant ceremonies and tight iterations for clear boundaries and six-week...

This article explores how to move beyond simplistic code coverage metrics to build truly comprehensive test suites using GitHub Copilot. Drawing from...

As developers, we often reach for full-scale graph databases when simpler solutions would suffice. When your knowledge graph is modest in size, keeping it...

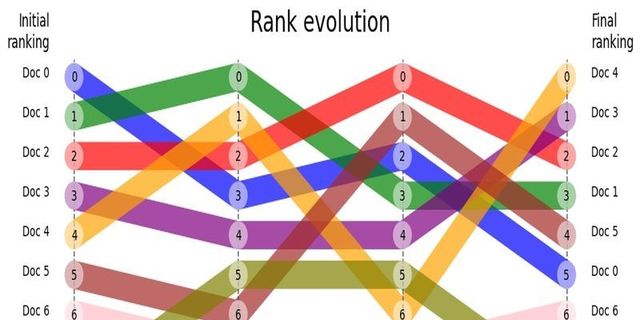

RAG systems depend on high-quality retrieval to surface relevant information. Analyzing how document rankings evolve through multiple re-ranking steps is...

Managing and monitoring the complex behavior of Large Language Models (LLMs) becomes increasingly crucial. LLMOps and LLM Observability provide essential...

Learn how prompt discovery can uncover the most effective prompts, and combinations thereof, for specific tasks, while also considering factors like...

This article discusses several advanced techniques that can be applied at different stages of the RAG pipeline to enhance its performance in a production setting.

This article explores the distinguishing features of Markov models and Transformer-based models like GPT, focusing on how they predict the next character in a...

Discover the delicate balance between Agile methodologies and imagination in the domain of data science and analytics. Uncover the impact of Agile...