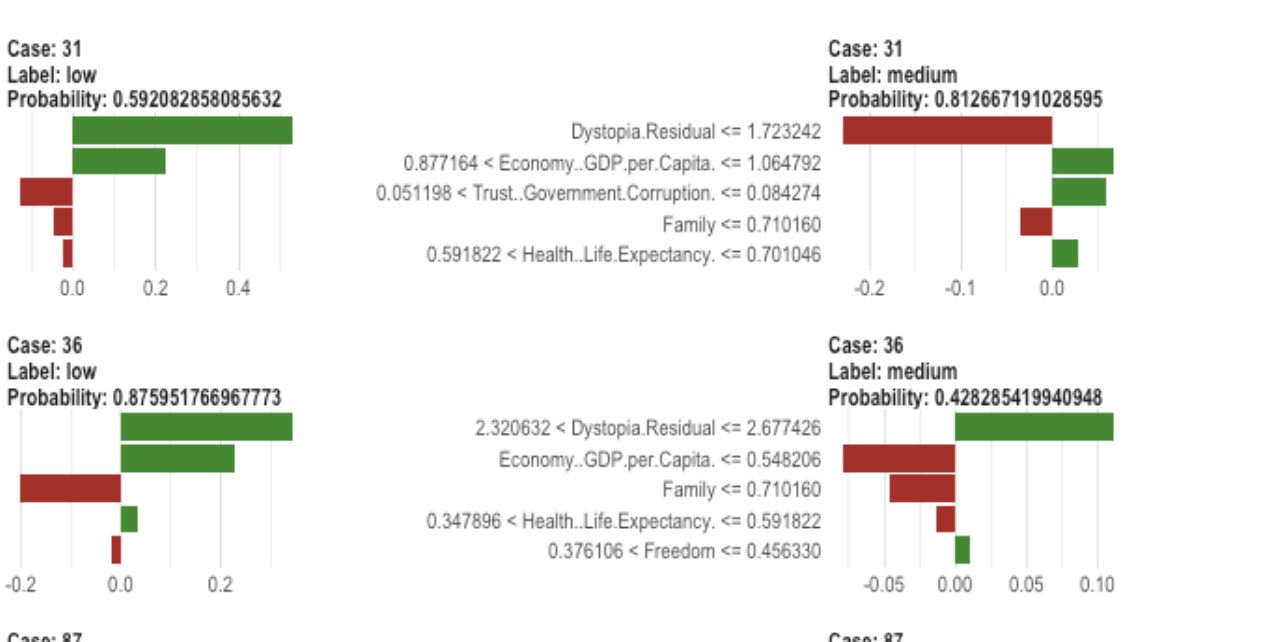

Attacking Differential Privacy Using the Correlation Between the Features

2023-04-19

Learn how differential privacy works by simulating an attack on data protected with that technique.
